This month’s TSQL2sday is hosted by Steve Jones (b|t) and this time the topic is the experience we all have with database triggers, one of the most controversial database objects you can work with… Continue reading “T-SQL Tuesday #106 – Triggers Headaches or Happiness”

This month’s TSQL2sday is hosted by Steve Jones (b|t) and this time the topic is the experience we all have with database triggers, one of the most controversial database objects you can work with… Continue reading “T-SQL Tuesday #106 – Triggers Headaches or Happiness”

Category: #TSQL2sday

T-SQL Tuesday #101 – The Essential SQL Server Tools in my stack

This month’s TSQL2sday is hosted by Jens Vestergaard (b|t) and the topic is about SQL Server tools that we, Database Administrators, Architects, and Developers use every day to work on our tasks.

This month’s TSQL2sday is hosted by Jens Vestergaard (b|t) and the topic is about SQL Server tools that we, Database Administrators, Architects, and Developers use every day to work on our tasks.

It’s a great topic, isn’t it

Personally, I think that the tools we use are of great importance for our daily work. Many of you may disagree by saying that skills are much more important than tools. Of course, I completely agree with this statement. The knowledge, experience, and skills enable us to do our work even without proper tools. However, it doesn’t mean that software we use doesn’t matter. Many applications, utilities, and frameworks can automate and speed up our daily activities. Thanks to that we can achieve more in a shorter time. That’s the reason why I blog about various SQL Server tools quite often (see posts in Tools category).

The SQL Server tools I use

I don’t have a long list of fancy and unknown applications I use. I think that most of them are well known in SQL Server community. Nevertheless, I decided to describe them for you. Maybe you will find something interesting here…

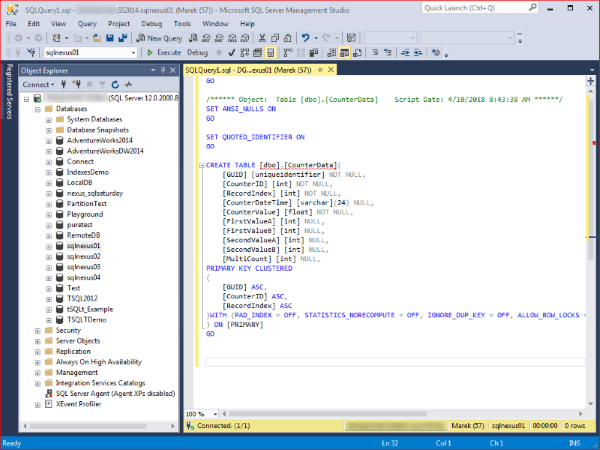

Server and Database Management

The first, and probably the most used by me tool is SQL Server Management Studio (SSMS) (download). I think I do not have to present it to anyone. It’s Swiss Army Knife tool for SQL Server specialists. Before SQL Server 2017 it was an inseparable element of every release. Since version 16, it is a separate application with a short release cycle. Every few weeks, Microsoft releases a new version and each of them delivers some new amazing features like XE Profiler, Data Discovery & Classification, Vulnerability Assessment, Import Flat File Wizard, and many, many more. I cannot imagine working with SQL Server without SSMS.

The first, and probably the most used by me tool is SQL Server Management Studio (SSMS) (download). I think I do not have to present it to anyone. It’s Swiss Army Knife tool for SQL Server specialists. Before SQL Server 2017 it was an inseparable element of every release. Since version 16, it is a separate application with a short release cycle. Every few weeks, Microsoft releases a new version and each of them delivers some new amazing features like XE Profiler, Data Discovery & Classification, Vulnerability Assessment, Import Flat File Wizard, and many, many more. I cannot imagine working with SQL Server without SSMS.

Despite the fact that SSMS is so popular, Microsoft decided to create another tool for database professionals. SQL Operations Studio (SQLOps) (download) is a new application from Microsoft designed to make our life easier. It’s a free and open source data management tool that enables you to work with SQL Server, Azure SQL DB and SQL DW from Windows, macOS, and Linux. This light-weight multi-platform solution can be used for SQL Server database development, administration, and monitoring. I described it more in details in this post.

T-SQL Source Code Management

For database source code management, I use SQL Server Data Tools (SSDT) (download) and Git for Windows (download). Thanks to SSDT we can create SQL Database projects in the same way you can create .NET projects in Visual Studio. These projects can be built and validated, and then the compiled artifact in the form of a DACPAC file can be easily deployed against the target database.

For database source code management, I use SQL Server Data Tools (SSDT) (download) and Git for Windows (download). Thanks to SSDT we can create SQL Database projects in the same way you can create .NET projects in Visual Studio. These projects can be built and validated, and then the compiled artifact in the form of a DACPAC file can be easily deployed against the target database.

To automate the DACPAC deployment process my team uses sqlpackage.exe from Data-Tier Application Framework (DACFx) (download) wrapped in our own PowerShell module. We decided to create our own wrapper because this gives us required flexibility and makes our database deployment process more streamlined.

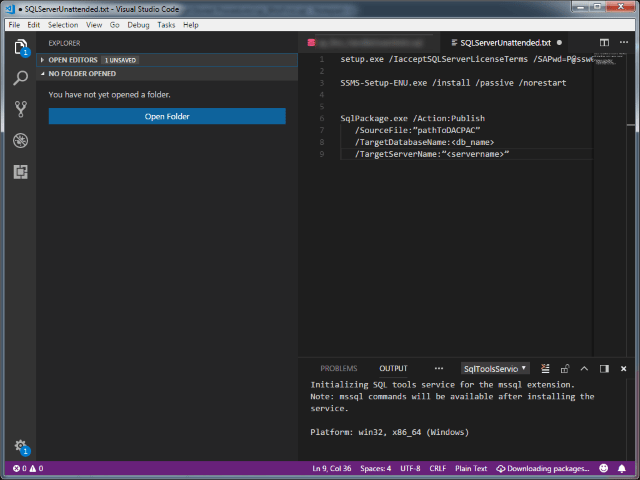

For text/SQL files

SSMS and SSDT are great and very powerful SQL Server tools for work with *.sql files. However, sometimes I find that I don’t need to use such big and complex tool for performing easy and quick tasks on text/SQL files. It may include things like opening file just for a quick view or quick search. I used to use Notepad++ (download) for such kind of activities, but recently I’m trying to make myself more familiar with Visual Studio Code (download).

SSMS and SSDT are great and very powerful SQL Server tools for work with *.sql files. However, sometimes I find that I don’t need to use such big and complex tool for performing easy and quick tasks on text/SQL files. It may include things like opening file just for a quick view or quick search. I used to use Notepad++ (download) for such kind of activities, but recently I’m trying to make myself more familiar with Visual Studio Code (download).

Visual Studio Code is a lightweight and extensible multi-platform source code editor which can be used on Windows, macOS, and Linux. Microsoft puts a lot of effort in the development of this solution. It comes with a built-in support for JavaScript, TypeScript, and Node.js and has a lot of extensions for other languages (such as T-SQL, C#, C++, Java, Python,…).

Visual Studio Code is a lightweight and extensible multi-platform source code editor which can be used on Windows, macOS, and Linux. Microsoft puts a lot of effort in the development of this solution. It comes with a built-in support for JavaScript, TypeScript, and Node.js and has a lot of extensions for other languages (such as T-SQL, C#, C++, Java, Python,…).

For text file comparison I use WinMerge (download). It is a free and an Open Source differencing and merging tool for Windows. It can compare both folders and files, presenting differences in a visual text format that is easy to understand and handle.

For text file comparison I use WinMerge (download). It is a free and an Open Source differencing and merging tool for Windows. It can compare both folders and files, presenting differences in a visual text format that is easy to understand and handle.

Database Unit Tests

To test database code I use tSQLt (download). tSQLt is a free and opensource database unit testing framework for Microsoft SQL Server, completely written in T-SQL and .NET CLR. Additionally, besides the long list of available assertions, it also has the great and rare functionality to mock various database objects. In my opinion that makes it better then tests in SSDT.

To test database code I use tSQLt (download). tSQLt is a free and opensource database unit testing framework for Microsoft SQL Server, completely written in T-SQL and .NET CLR. Additionally, besides the long list of available assertions, it also has the great and rare functionality to mock various database objects. In my opinion that makes it better then tests in SSDT.

In addition to the above, I recently started to use SQLCover (download) created by Ed Elliott (b|t). It’s one more free and open source solution, this time for unit tests code coverage report generation. It’s amazing how well it works.

Community scripts/tools

Besides all of the above-mentioned SQL Server tools, I have my favorite set of well-known T-SQL scripts or procedures I use commonly for troubleshooting. The first and probably most-known solution is sp_whoisactive (download) procedure from Adam Machanic (b|t). It’s something like a powerful combination of sp_who2, Activity Monitor, and many other diagnostic queries based on DMVs. If you’ve never used it before, then you should try it now!

Other scripts I use quite often are stored procedures from Brent Ozar ULTD First Responder Kit (download). This bundle consists of such tools like sp_Blitz, sp_BlitzFirst, sp_BlitzCache, and a few others also very useful.

Other scripts I use quite often are stored procedures from Brent Ozar ULTD First Responder Kit (download). This bundle consists of such tools like sp_Blitz, sp_BlitzFirst, sp_BlitzCache, and a few others also very useful.

Troubleshooting Tools

In very difficult situations, when above scripts are not enough for issue troubleshooting, I use PSSdiag (download) and SQLNexus (download). Both were developed in Microsoft as side projects mainly used by SQL Server Customer Support Service and Product Support Service teams. Now, public versions are free and opensource and can be used by everyone.

PSSdiag is a data collector utility that can capture various interesting data sets, counters, and metrics. For example:

- Event Logs

- PerfMon counters

- Server configuration

- Error Logs

- Profiler Trace / XE Session

- Blocking information

- Query statistics

- and many others…

If that would not be enough it also can be extended by custom collector scripts. This gives the user the ability collect all the necessary information.

SQLNexus analyses the data collected by PSSdiag and generates very detailed reports that describe monitored SQL Server state and health. They are invaluable in troubleshooting SQL Server issues.

In case you’re interested in possibilities that these tools give, I encourage you to read my other blog post describing PSSdiag and SQLNexus use case.

SQL Server tools that are on my check-it-out list

In addition to all of these tools I already use, there are others I still want to try out.

dbatools (download) is a PowerShell module with more than 300 SQL Server administration, best practice and migration commands included. Thanks to the huge number of contributors from SQL Server community it’s growing and getting better and better.

dbatools (download) is a PowerShell module with more than 300 SQL Server administration, best practice and migration commands included. Thanks to the huge number of contributors from SQL Server community it’s growing and getting better and better.

The same amazing group of people created dbachecks (download) PowerShell module. It’s a solution for automated environment validation. It’s based on Pester and has already more than 80 checks implemented.

The same amazing group of people created dbachecks (download) PowerShell module. It’s a solution for automated environment validation. It’s based on Pester and has already more than 80 checks implemented.

Last but not least is dbareports (download). It uses PowerShell, T-SQL and SQL Agent to gather information about your SQL Server estate. It also comes with beautiful SSRS reports and PowerBi and Cortana Integration.

Last but not least is dbareports (download). It uses PowerShell, T-SQL and SQL Agent to gather information about your SQL Server estate. It also comes with beautiful SSRS reports and PowerBi and Cortana Integration.

All of these solutions wouldn’t exist without Chrissy LeMaire (b|t) and Rob Sewell aka SQL DBA with A Beard (b|t) who started all of this! Thanks to their and SQL Server community effort we have a plenty of great SQL Server tools for our use.

-Marek

Share it:T-SQL Tuesday #98 – Technical challenge

This month’s TSQL2sday is hosted by BlobEater (Arun Sirpal b|t) and the topic is about solving a technical challenge you faced in the past.

This month’s TSQL2sday is hosted by BlobEater (Arun Sirpal b|t) and the topic is about solving a technical challenge you faced in the past.

It’s another great subject for writing a blog post. Troubleshooting, issue solving, and root cause analysis are very exciting aspects of every DBA’s work. It will be a great experience to read all these posts describing various kinds of issues that DBAs from around the world have to deal with.

However, it’s not an easy task to write such post. I had plenty of ideas what I could describe but after a moment of thought, everything seemed to be obvious and not interesting at all. Finally, after few days of intensive thinking, I decided what to write about.

“Application is freezing”

Three years ago I was working with a customer who was reporting an application freeze issue. A few weeks before that they upgraded the application to the newer version. Since then, everything was fine for some time, but recently they started to observe an application freeze issue. Each time it occurred, the application was unresponsive for most of the users. It was very non-deterministic behavior. Freeze appeared once every few days at different times of day. Usually, they took few minutes and always were resolved without any manual intervention.

At the very beginning, the major part of investigation activities was focused mainly on the network and a Citrix farm. Unfortunately, few days of checks gave nothing. After eliminating every subsystem from application infrastructure one by one only the database server was left.

“It has to be the database!”

The database server was quite powerful with 160 logical processors and 512 GB of Memory. Attached SAN storage also was very good with 0-2 ms of latency. According to logs, the server was never utilized in 100% so hardware was not our main concern…

The biggest problem with the investigation of the database were irregular occurrences of the freeze. Every time it occurred and we were called by the customer, the issue was already gone before we connected. That was irritating. We were never able to check what is happening on the system during the impact.

At that time this particular database server was not monitored by any SQL Server monitoring tool, so we didn’t have many logs describing server activity. We reviewed all available logs, scheduled jobs, and other regular activities to verify if anything could be involved. Unfortunately, it didn’t give us any new findings.

Finally, we decided to deploy some monitoring mechanism which would collect data and give as good situation overview for our after-the-facts analysis.

Diagnostic data collection

We decided to use PSSdiag. It’s a free data collection utility developed as a side project by Microsoft. It’s mainly used by Microsoft Customer Support Service and Product Support Service teams. It’s not the simplest tool to configure, but it collects all required information, like PerfMon counters, SQL Trace, error logs, and diagnostic data about SQL Server state, health, and its performance.

It took us a while to create a proper configuration because the volume of data being collected was huge. We also had to test it very well, because we couldn’t affect customer’s SQL Server performance. Finally, we ended up with the configuration which in this particular environment was collecting 0,5GB of data per minute (on average). Data retention was driven by fixed maximum data size because we didn’t want to consume all free space on one of SQL Server drives. Because of it, the collected time frame was varying from 1 hour up to max 2 hours. That gave us about 30 to 90 minutes to collect this data after the customer called us.

When the customer called us a few days later informing about another freeze occurrence, we had bad luck. Data from issue time period was already gone – overwritten by newer ones. “Fortunately”, two days later there was one more occurrence, which this time was captured properly. I copied this data out from the server and was ready for analysis.

Captured Data Analysis

To analyze data captured by PSSdiag I used a tool called SQLNexus. It’s another tool created as a side project at Microsoft. It processes the output from PSSdiag into a database and runs reports on top of it.

The result was worth all the configuration and testing efforts. At first glance, I knew that we had captured what we needed.

As you can see in above screenshot, something wrong happened around 16:05 and it lasted until 16:20. During this time period, the number of Started and Completed Batches dropped significantly and the average duration of execution increased. A large amount of Attentions was also noticeable. When I opened another report with blocking information everything became clear.

There was blocking situation that lasted for almost 15 minutes and blocked a total 15314 other SQL Server sessions! This is madness…

There was blocking situation that lasted for almost 15 minutes and blocked a total 15314 other SQL Server sessions! This is madness…

Blocking Chain Detail report revealed additional important information. One of our small, utility applications used to manage user data (let’s call it App02) was identified as a head blocker (SPID 137). The application was not used very often by the customer, which explained why freezes occurred in so non-deterministic way. However, most interesting information was hidden somewhere else… It’s the Transaction Name: implicit_transaction. What…?

Blocking Chain Detail report revealed additional important information. One of our small, utility applications used to manage user data (let’s call it App02) was identified as a head blocker (SPID 137). The application was not used very often by the customer, which explained why freezes occurred in so non-deterministic way. However, most interesting information was hidden somewhere else… It’s the Transaction Name: implicit_transaction. What…?

Let’s dig a little bit deeper and check what code App02 application executed.

Insert statement to the [dbo].[User] table was identified as the last query that our application executed. But why would an insert statement cause such behavior?

That bothered me a lot. I needed to know what happened in session 137 before this insert statement. Because I wasn’t able to find this kind of data in SQLNexus reports I decided to get it directly from the database. I had to spend some time to make myself familiar with the table structure created by ReadTrace tool from RML Utilities (used by SQLNexus during data import process). Finally, I developed bellow code snippet:

DECLARE @startTime datetime DECLARE @endTime datetime DECLARE @blockThresholdInMs int SET @startTime = '2014-07-08 15:00' SET @endTime = '2014-07-08 17:00' SET @blockThresholdInMs = 20000 --20sec -- Get blocking chain SELECT * FROM dbo.tbl_BLOCKING_CHAINS WHERE blocking_start BETWEEN @startTime AND @endTime AND max_wait_duration_ms > @blockThresholdInMs DECLARE @blockingStartTime datetime DECLARE @blockingEndTime datetime DECLARE @blockingSession int SELECT @blockingStartTime = blocking_start, @blockingEndTime = blocking_end, @blockingSession = head_blocker_session_id FROM dbo.tbl_BLOCKING_CHAINS WHERE blocking_start BETWEEN @startTime AND @endTime AND max_wait_duration_ms > @blockThresholdInMs -- Get connection info for blocking chain header SELECT * FROM ReadTrace.tblConnections WHERE Session = @blockingSession AND @blockingStartTime BETWEEN StartTime AND EndTime DECLARE @connectionStartTime datetime DECLARE @connectionEndTime datetime SELECT @connectionStartTime = StartTime, @connectionEndTime = EndTime FROM ReadTrace.tblConnections WHERE Session = @blockingSession AND @blockingStartTime BETWEEN StartTime AND EndTime -- Get statements executed by head blocker SELECT ub.NormText, ub.OrigText, * FROM ReadTrace.tblBatches b left join ReadTrace.tblUniqueBatches ub on b.HashID = ub.HashID WHERE session = @blockingSession AND StartTime BETWEEN @connectionStartTime AND @connectionEndTime order by b.BatchSeq -- Get additional events (like Exception) for this session in this time frame SELECT * FROM ReadTrace.tblInterestingEvents WHERE Session = @blockingSession AND StartTime BETWEEN @connectionStartTime AND @connectionEndTime AND Error IS NOT NULL

Maybe it’s not the most beautiful piece of code I wrote, but it did its job. It displays data about head blocker session, executed queries, and other interesting events (like exceptions) that could have taken place. Here are the results (click to enlarge):

You can see that after connecting to the database App02 application executed SET IMPLICIT_TRANSACTIONS ON, then executed one more SELECT statement and that’s it. Nevertheless, there is one more thing. At 16:03:52 an error 2601 was reported. It is the time when INSERT statement to the [dbo].[User] table was executed. So what is the error 2601? Some of you probably already know it. I didn’t, so I had to look for it in the documentation. It turned out that it is unique index violation. OK, let’s summarise what we already know:

- App02 connects to the database

- It executes some statements

- It SET IMPLICIT_TRANSACTIONS to ON

- Then it tries to insert data to table, but fail because of unique index violations

So far so good, but why the transaction was not rolled back?

“The problem is the code”

I had to look for answers to this question elsewhere. Namely in the application source code. It took me a while, but I was able to find c++ code that was responsible for this. Its simplified version looks that way:

GetDatabase()->BeginTrans();

TRY

{

result=Data::Put();

}

CATCH (CDBException, e)

{

ShowMessageBox(e->m_strError);

result=FALSE;

}

END_CATCH

if (result)

{

TRY

{

//Do sth else

}

CATCH (CDBException, e)

{

ShowMessageBox(e->m_strError);

result=FALSE;

}

END_CATCH

}

if (result)

GetDatabase()->CommitTrans();

else

{

GetDatabase()->Rollback();

}

Do you already see it? I hope you do. In case whenever something goes wrong an error message in a dialog window is displayed to the application user. The opened transaction is not rolled back until the user closes this window.

And this is exactly what happened. Application user wanted to create a new user in the system. Unfortunately, due to some constraints, this process failed because new entry he or she created was not unique. An error message was displayed, but the user didn’t close it immediately. Instead of this, he or she started to browse application user manual for additional information and problem resolution.

After this discovery, new application patch was ready the next day and immediately deployed to the customer environment.

Summary

Guys, remember to educate application developers and teach them to do not implement any unnecessary steps in the database transaction scope. Especially if they require user action or input. The better idea is to not open database transaction from the application code, but that’s another story. Next advice is to make yourself familiar with tools I mention in this post. All of them are free and half of them is open source and available on Github. If you cannot afford professional SQL Server monitoring tool that may be your salvation.

PS: That whole story reminds me of one tweet I saw recently

"What if I change this index"

The problem is the code

"If I update these statistics"

The problem is the code

"How about this index"

The problem is the code

"Can I use an INCLUDE"

The problem is the code

"I can't change the code"

The problem is the code— Grant Fritchey (@GFritchey) January 5, 2018

-Marek

Share it:T-SQL Tuesday #97 – My learning plan for 2018

This month’s TSQL2sday is hosted by Malathi Mahadevan (b|t) and the topic is about setting learning plan and goals for 2018. It is a perfect time to think how I would like to develop myself next year, what skills I should improve or what to change in my career path.

This month’s TSQL2sday is hosted by Malathi Mahadevan (b|t) and the topic is about setting learning plan and goals for 2018. It is a perfect time to think how I would like to develop myself next year, what skills I should improve or what to change in my career path.

I never used to make New Year resolutions. I never had such need, and never thought about it as a good idea. However, I also never considered it as a simple way to create a learning plan. A plan that I can pursue to become a better database specialist. A plan which will help me get rid of my inner procrastinator (Mr. P: “keep dreaming…”).

This month’s T-SQL Tuesday topic encouraged me to try… I sat down for a while and this is the plan I came up with… Continue reading “T-SQL Tuesday #97 – My learning plan for 2018”

Share it:T-SQL Tuesday #85 – Backup and Recovery – Maintenance Plans

This month’s topic for T-SQL Tuesday #85 post is: Backup and Recovery. I think this is very interesting topic and will result in many new great articles. I assume you already know what a backup is, and why it is very important to do and store backups for all your databases. The question which many database administrators ask themselves is how to do backups right? What tools to use?

This month’s topic for T-SQL Tuesday #85 post is: Backup and Recovery. I think this is very interesting topic and will result in many new great articles. I assume you already know what a backup is, and why it is very important to do and store backups for all your databases. The question which many database administrators ask themselves is how to do backups right? What tools to use?

There is a lot of possibilities:

- create own T-SQL scripts,

- use third-party scripts like Ola Hallengren solution,

- use PowerShell,

- create maintenance plans,

- … and probably a lot of more.

In my post for this month’s T-SQL Tuesday topic I decided to describe you, how you can leverage SQL Server built-in Maintenance Plans step-by-step. Continue reading “T-SQL Tuesday #85 – Backup and Recovery – Maintenance Plans”

Share it:In the 8 years I have been using SQL Server, we’re still dealing with a lack of cooperation

It ’s the first time when I’m participating in T-SQL Tuesday event. I’m so excited I can share my thoughts with you. This month’s topic is:

’s the first time when I’m participating in T-SQL Tuesday event. I’m so excited I can share my thoughts with you. This month’s topic is:

In the <N> years I have been using SQL Server, we’re still dealing with <some problem>

That’s very interesting topic and when I read Andy Mallon (b|t) post I already knew what I will write about. My version of this topic is:

In the 8 years I have been using SQL Server, we’re still dealing with a lack of cooperation.

During my career I’ve already had plenty of situations when a different teams which supposed to work on the same side, doesn’t do that in proper way.

My favorite example is when one team, like first level of Product Support Team or Customer Care Team works on database related issue. It doesn’t matter what kind of issue is that. It can be database slowness, query timeout, transactional log growth, etc. You can put here everything you would like or what you worked recently on. After all, when issue is somehow fixed, the case is handover to the second team, like third level of Database Administrators support, which should do a root cause analysis and close the case with a detailed explanation what exactly happened.

There is a catch!

In my case the first team doesn’t provide any input for a second team. They never collect a data for analysis during incident. I understand it in some stage. Customer calls them and wants to have an issue fixed as soon as possible. But on the other side, they are later obligated to provide an explanation what happened. Due to lack of detailed SQL Server knowledge they need to reach out to the more experienced and specialized team of Database Administrators. But even the most experienced Database Administrator is not able to provide issue root cause without analyzing data captured during this incident. Right? You’re probably wondering how such communication between teams may look like. Here you go:

“Dear DBA Team,

Today customer reported database slowness in XYZ environment. Issue was solved by first level support by failing over instance to the second cluster node. Please take a look on this and provide explanation what caused this issue.”

“Did you collect any data during incident?”

“Because of very limited time no data was collected. Please check server and provide explanation for customer. It’s very urgent.”

Oh come on, I already saw that too many times. It’s very frustrating when you have to explain again and again that you’re not able to provide any explanation because the first team doesn’t collect any valuable data. Unfortunately in this case you’re always the bad guy and they are good guys. They solved issue! Even if they had to restart SQL Server to solve long running query issue. You’re the database specialist which is not able to explain what happened.

What can be done to fix this situation?

Well… You can put a monitoring tool in place which will trace and capture everything what happens on SQL Servers you’re responsible on. Or… you can try to improve work culture and teach other teams they just need to collect data. Due to the environment limitations I had no choice and my team works on this second option. It’s up to you what will work better for you.

-Marek

Share it: